Building a secure Azure data platform

A common concern people have about the cloud is security: Is moving business data, or any data in general, from on-premises to the cloud secure? The short answer is yes. In more detail: It is secure, often even more secure than on-premises, but only if your data platform is designed and built with security in mind.

Fortunately, Azure makes data security fairly straightforward by handling much of the groundwork for us, such as data encryption and support for various user authentication methods. This lets us focus on the big picture: Who can access our data, where it can be accessed from, how it can be accessed, and so forth. In this post I will give you a quick introduction to the security concerns that should be considered when designing and building an Azure data platform.

The Azure data platform as a whole is, of course, huge: It consists of various database offerings (e.g. Azure SQL Server, Azure Synapse Analytics, Azure Cosmos DB, and Azure’s implementations of PostgreSQL, MySQL and MariaDB), Azure Storage, Azure Data Lake, Azure Data Factory, and more.

Each of these products has its own set of security features that need to be considered when designing a data platform architecture. These features then need to be weighed against the business requirements of the data platform to ensure that, on one hand, the data remains secure and, on the other, the platform remains fit for its purpose. These technical nuances aside, some overarching security concepts of an Azure data platform remain the same regardless of which Azure products your solution uses.

Who can access my data?

The first key concern with a data platform is determining who has access to the data and what kind of access they have: Read, write, admin, or something else? More specifically, we are less concerned with naming particular people than with identifying user roles and finding ways to authenticate users and authorize them for those roles.

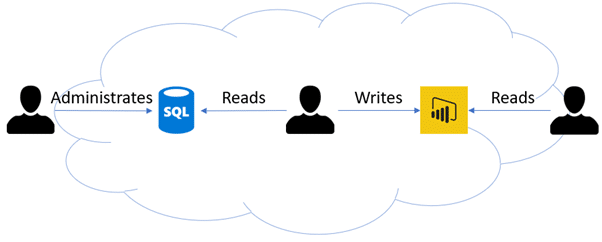

To give a simplified example, a BI solution has at the very least the roles of report reader, report designer and data administrator.

- The report reader, who is also the end user of our solution, only needs read permission on the report itself.

- The report designer, on the other hand, should have at least read permission on the underlying data source.

- The data administrator needs full administrative permissions on that data source.
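To make the idea of roles concrete, here is a minimal Python sketch of the three BI roles above as a deny-by-default permission table. It is only an illustration: the role and resource names are hypothetical, the report designer’s read/write access to reports is an assumption not stated above, and none of this is an Azure API. In a real platform the equivalent rules are configured in each service rather than in application code.

```python
from enum import Flag, auto


class Permission(Flag):
    """Permission levels used in this illustration (hypothetical, not an Azure API)."""
    NONE = 0
    READ = auto()
    WRITE = auto()
    ADMIN = auto()


# Hypothetical (role, resource) -> permissions table for the BI example.
# Any pair that is not listed is denied by default.
ROLE_PERMISSIONS: dict[tuple[str, str], Permission] = {
    ("report_reader", "report"): Permission.READ,
    # Assumption: designers also edit the reports they build.
    ("report_designer", "report"): Permission.READ | Permission.WRITE,
    ("report_designer", "data_source"): Permission.READ,
    ("data_admin", "data_source"): Permission.READ | Permission.WRITE | Permission.ADMIN,
}


def is_allowed(role: str, resource: str, needed: Permission) -> bool:
    """Return True only if the role holds every permission in `needed` on the resource."""
    granted = ROLE_PERMISSIONS.get((role, resource), Permission.NONE)
    return (granted & needed) == needed
```

The important property is the default: a role and resource pair that is not listed gets no permissions at all, so a report reader cannot query the data source directly.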

Determining the user roles for a data platform helps us specify the authentication and authorization methods for the platform’s components, as well as the structure of our data. For example, if the platform contains an Azure Data Lake service with data intended for several different roles, we should organize the data so that we can assign role-based access according to each dataset’s intended audience.

In such a case we might have one dataset for people working in sales and another for people in finance. With Azure Data Lake, one option among many is to create security groups for these roles and then use Azure role-based access control (RBAC) to grant those groups access to specific containers in the Data Lake.
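The group-based approach can be sketched as follows. This is a simplified Python model, not a call into Azure: the group names, user names and container names are made up, and only the two role names (Storage Blob Data Reader and Storage Blob Data Contributor) are real Azure built-in roles. A user’s effective access to a container is the union of the roles assigned to the groups they belong to.

```python
# Hypothetical security groups and their members.
GROUP_MEMBERS: dict[str, set[str]] = {
    "sg-sales-readers": {"alice@example.com", "bob@example.com"},
    "sg-finance-readers": {"carol@example.com"},
    "sg-data-admins": {"dave@example.com"},
}

# Role assignments scoped to Data Lake containers: (group, container) -> role.
# The role names are Azure built-in roles; everything else is illustrative.
CONTAINER_ASSIGNMENTS: dict[tuple[str, str], str] = {
    ("sg-sales-readers", "sales"): "Storage Blob Data Reader",
    ("sg-finance-readers", "finance"): "Storage Blob Data Reader",
    ("sg-data-admins", "sales"): "Storage Blob Data Contributor",
    ("sg-data-admins", "finance"): "Storage Blob Data Contributor",
}


def effective_roles(user: str, container: str) -> set[str]:
    """Collect the roles a user inherits on a container through group membership."""
    return {
        role
        for (group, scope), role in CONTAINER_ASSIGNMENTS.items()
        if scope == container and user in GROUP_MEMBERS.get(group, set())
    }
```

Assigning roles to groups rather than to individuals means that onboarding a new sales analyst only requires adding them to the sales group; no new role assignment is needed on the container.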

With a data platform we also need to consider the end solution, whether it is a Power BI reporting solution, custom software, or something else entirely: How does the end solution access the underlying data stores, and how does the solution authenticate and authorize its users?

If the solution uses application permissions to retrieve or manipulate data, the data store only sees the application’s identity, so the application itself must authenticate and authorize its users reliably. If, on the other hand, the application accesses data using the signed-in user’s identity, we can implement additional user-based security at the data storage level.
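The difference between the two approaches can be illustrated with a small Python model of a data store that filters rows by the caller’s identity, loosely similar to what row-level security does in Azure SQL. The identities, regions and entitlement table are hypothetical. When the application connects with its own identity, the store returns every row and the filtering burden falls on the application; when it passes the signed-in user’s identity, the store can enforce the filter itself.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SalesRow:
    region: str
    amount: float


ROWS = [SalesRow("north", 100.0), SalesRow("south", 250.0), SalesRow("north", 75.0)]

# Hypothetical per-user region entitlements enforced by the data store.
USER_REGIONS: dict[str, set[str]] = {
    "alice@example.com": {"north"},
    "bob@example.com": {"south"},
}

# Hypothetical service identity that the application uses with app permissions.
APP_IDENTITY = "reporting-app"


def query(caller: str) -> list[SalesRow]:
    """Store-side filtering: the store only knows who the caller is."""
    if caller == APP_IDENTITY:
        # App permissions: the store cannot tell end users apart,
        # so it returns everything and the app must filter.
        return list(ROWS)
    allowed = USER_REGIONS.get(caller, set())
    return [row for row in ROWS if row.region in allowed]
```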

Where can my data be accessed from?

When people think of the cloud and its potential vulnerabilities, the most common concern is that data in the cloud is available for everyone to see. After all, if your data is in the cloud, it is on the internet, outside the comfort of your company firewalls, right? Well, not exactly. Azure has its own firewalls that let you block traffic to and from various services. Azure SQL Server’s firewall, for example, blocks all traffic from outside Azure by default, and you need to allow specific IP addresses in the firewall before the database can be accessed.
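Azure SQL server-level firewall rules are defined by a start and an end IP address. The Python sketch below models that deny-by-default behaviour with the standard `ipaddress` module. It illustrates the logic only and is not how Azure implements it; the rule name and the addresses (from the 203.0.113.0/24 documentation range) are placeholders.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class FirewallRule:
    """An allow rule covering an inclusive IPv4 range (start and end address),
    similar in shape to an Azure SQL server-level firewall rule."""
    name: str
    start_ip: str
    end_ip: str

    def matches(self, client_ip: str) -> bool:
        ip = ipaddress.IPv4Address(client_ip)
        start = ipaddress.IPv4Address(self.start_ip)
        end = ipaddress.IPv4Address(self.end_ip)
        return start <= ip <= end


def is_connection_allowed(client_ip: str, rules: list[FirewallRule]) -> bool:
    """Deny by default: a client gets through only if some rule covers its address."""
    return any(rule.matches(client_ip) for rule in rules)


rules = [FirewallRule("office", "203.0.113.10", "203.0.113.20")]
```

In Azure itself such a rule would be created in the portal or, for example, with the Azure CLI command `az sql server firewall-rule create`, which takes `--start-ip-address` and `--end-ip-address` parameters.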

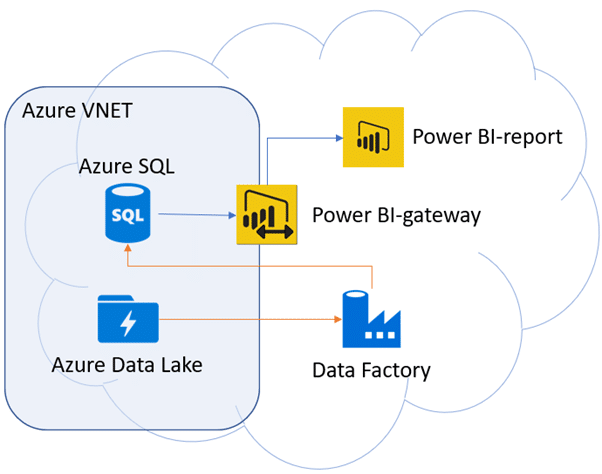

You might then ask: If I open a specific IP address in the firewall, can’t that IP still be spoofed by a potential attacker? Yes, it can, which is why you should also use strong authentication methods (such as Azure AD authentication with MFA enabled, in the case of Azure SQL Server) and preferably use Azure virtual networks (VNETs) to connect to your data platform services without exposing public endpoints.

By using VNETs you can effectively hide your data platform from the internet and make it available only within Azure through Azure’s trusted services, or even only within the VNET itself. Connections to a VNET-protected service from the public network would then go through a VPN tunnel, for example one installed on an Azure virtual machine that is also part of the VNET.

So, what are Azure’s trusted services? They are a set of Azure services that Microsoft has designated as allowed to access services inside VNETs unless they are explicitly blocked. For example, Azure Data Factory can access a VNET-protected Data Lake instance, provided that the Data Factory’s managed identity has the proper permissions, regardless of whether the Data Factory is located in your own Azure subscription or is owned by some other organization.
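The key point about trusted services is that network rules and identity permissions are two separate gates. The following Python sketch is a deliberately simplified model of that reasoning, not of Azure’s actual evaluation logic: the request sources and the `allow_trusted_services` flag are assumptions standing in for the network settings of a VNET-protected Data Lake with public access disabled.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    source: str                   # simplified: "vnet", "public" or "trusted_service"
    identity_has_rbac_role: bool  # does the caller's identity hold a data-access role?


@dataclass(frozen=True)
class NetworkPolicy:
    allow_trusted_services: bool  # stands in for the trusted-services exception


def can_access(req: Request, policy: NetworkPolicy) -> bool:
    """Both the network gate and the identity gate must allow the request."""
    if req.source == "vnet":
        network_ok = True
    elif req.source == "trusted_service":
        network_ok = policy.allow_trusted_services
    else:
        # Public traffic is blocked in this VNET-only model.
        network_ok = False
    return network_ok and req.identity_has_rbac_role
```

With the exception enabled, a Data Factory whose identity holds the right role gets through; turning the exception off closes that path even for identities with the right role, and an identity without a role is refused either way.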

With this in mind we need to ask ourselves: How likely is it that someone could compromise a service-level identity and abuse these trusted services, and therefore how relevant is it for us to block Azure’s trusted services from our VNETs? Which leads us to…

How secure is secure enough?

That is the question, isn’t it? When talking about security in IT, there eventually comes a point where adding more security means limiting the use cases of your solution. For example: Should you allow trusted Azure services to connect to your VNET-integrated Data Lake service? Saying “No” would make your data even more secure, but it would also limit your technical options for working with the data.

It is at these points that the difficult questions need to be asked: Is the environment already secure enough for our needs? Is the ability to enable all our scenarios more important than tightening our security? It is not uncommon for one party in a company to advocate for more security while another worries about how usable the solution will remain for the business if security is tightened to the maximum.

So: How secure is secure enough? At what point should we be content with the level of security present in our solution?

The answer is, of course, “it depends.” It mostly depends on what your solution is and, for a data platform, what data your platform holds, how important or sensitive that data is, and how it is being used. When dealing with truly sensitive data, such as personal information or national secrets, we can safely say that more secure is better.

With a public reporting service, on the other hand, we can be fairly relaxed about read access to the data, while write access can easily be kept very limited. And then there are the business solutions that fall into the grey area of “We should be very strict with security, but this solution also needs to be usable enough to produce value for our business”… This is often the point where it is important to sit down with all of the solution’s stakeholders, along with good security and data platform consultants, to find the right compromise for you.

The world of security is vast, and even when writing about only a very specific part of it (such as the Azure data platform), it is impossible to fit everything into one blog post. So if you are interested in hearing more, perhaps because you are planning to build a cloud-based data platform of your own or would like a security review of your existing data platform, do get in touch!

Text: Joonas Äijälä